After its launch this past Nov. 30, the easy-to-use ChatGPT surpassed one million users within a month and reached the 100-million-user milestone within two.

The controversial artificial intelligence tool, developed by San Francisco-based tech company OpenAI, can produce a great essay or a complex string of software code in seconds from a simple prompt.

But it also gives university, college and high school students an opportunity to pass off an AI-generated class assignment as a product of their own research and writing.

To address this new challenge to academic integrity, the University of Ottawa, Carleton University and Algonquin College are in the process of introducing guidelines.

The University of Ottawa has added a special list of “Frequently asked questions about artificial intelligence (AI) and academic integrity” to the Academic Integrity page of its website, explaining how AI tools like ChatGPT can be used in a way that amounts to cheating.

The uOttawa FAQ states that using ChatGPT does not necessarily constitute a violation of academic integrity and notes that some courses and instructors may allow some flexibility in the use of such tools. The university advises students to “check your course syllabus and each assignment’s instructions for the academic integrity provisions.”

If a student is still unsure, “it’s always best to ask your professor,” states the uOttawa guide.

At the same time, the university emphasizes that: “AI needs to be used in a way that maintains academic integrity. For example, using AI-generated text as part of your academic work without permission or a citation is a violation of academic integrity.”

Carleton University has not yet listed on its website an overview of academic integrity issues associated with ChatGPT. At a university senate meeting on Feb. 24, Carleton provost Jerry Tomberlin and David Hornsby, assistant vice-president of teaching and learning, discussed the topic.

The meeting minutes state that Tomberlin and Hornsby “acknowledged the challenges this new technology brings for instructors, particularly with regards to academic integrity. An ad hoc group including (university) senators, associate deans, and others with expertise in this area will convene soon to review this issue.”

The minutes also state that Carleton University will maintain contact with other post-secondary institutions to conduct a cross-sector analysis of AI. This will be followed by a series of engagements with the campus community. The goal is to come up with a set of recommendations and policies to provide instructional guidance to faculty on this issue.

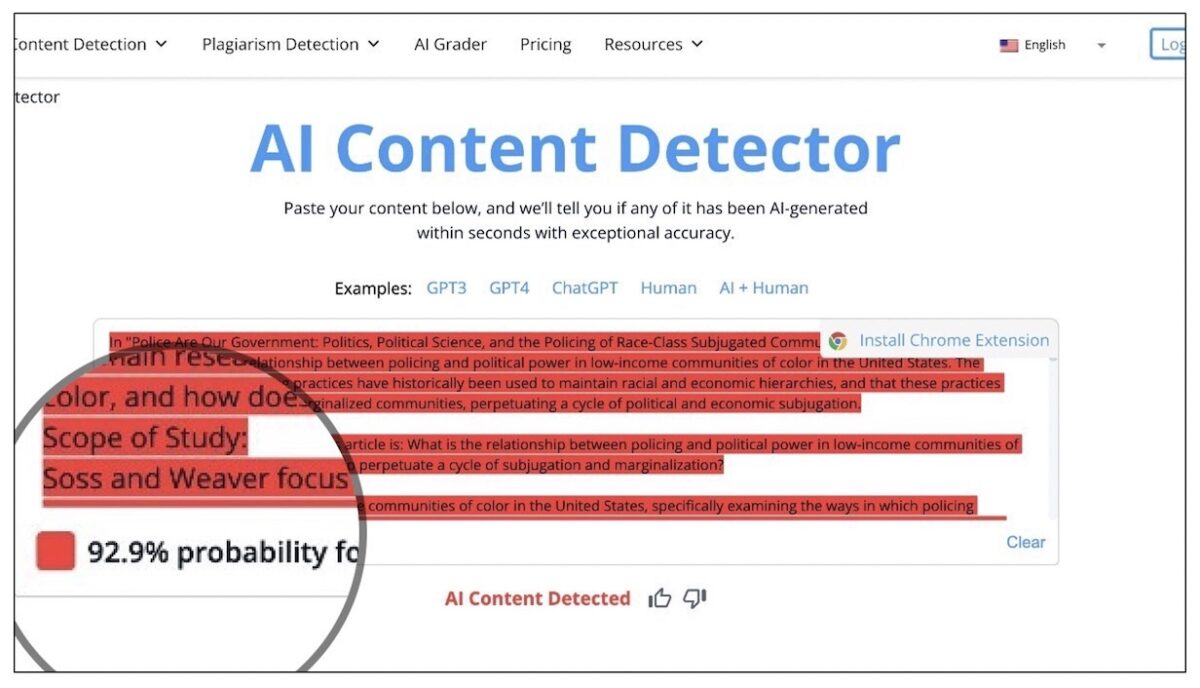

Carleton says it would also consider introducing faculty to certain testing tools designed to detect whether a submitted assignment was produced using AI technology. There are already such products available on the market.

At the same time, some professors have begun experimenting with ChatGPT and similar tools. Dr. Erin Tolley, a professor in Carleton’s political science department, took a summary of her course material produced entirely by ChatGPT and ran the document through three separate AI text detectors.

“One recognized it as ‘possibly generated by AI,’ ” said Tolley, “the second said it was 99.98-per-cent fake, and the third as ‘parts written by AI.’ ”

Julia Huckle, chair of academic operations and planning at Algonquin College, said ChatGPT does pose a significant challenge when it comes to ensuring that students complete their assignments independently.

“These are all different types of AI tools with varied levels of acceptance and utilization in the classroom and/or by students — apps ranging from Grammarly, to resumé-building tools and now language-generating tools like ChatGPT,” said Huckle.

“If you don’t do the work or you give it to someone or something else, these are all ways of not doing the work.”

Huckle is a member of the Academic Integrity Council of Ontario and said she will continue to liaise with her post-secondary colleagues and the collegiate integrity organization to discuss the impact of the new technology and provide students with clearer explanations and expectations.

The AICO also recently released a guide on how AI can affect academic integrity. The guide states: “Preserving academic integrity in our pedagogical approaches, in all educational contexts, should be carefully considered as the capabilities of these tools develop and evolve, impacting organizations in different ways.”

Many universities outside of Ottawa are also working on the AI-related academic integrity issue. The University of British Columbia and the University of Waterloo each host discussions on their websites about the challenges posed by ChatGPT.

It’s expected that all universities in Canada will continue their efforts in the coming months to maintain a fair and honest academic environment during the boom in AI technology.